NMF

One of the ways in which Ecco tries to make Transformer language models more transparent is by making it easier to examine the neuron activations in the feed-forward neural network sublayer of Transformer blocks. Large language models can have up to billions of neurons. Direct examination of these neurons is not always insightful because their firing is sparse, there's a lot of redundancy, and their number makes it hard to extract a signal.

Matrix decomposition methods can give us a glimpse into the underlying patterns in neuron firing. From these methods, Ecco currently provides easy access to Non-negative Matrix Factorization (NMF).

NMF

Conducts NMF and holds the models and components

__init__(self, activations, n_input_tokens=0, token_ids=tensor([]), _path='', n_components=10, from_layer=None, to_layer=None, tokens=None, collect_activations_layer_nums=None, **kwargs)

special

Receives a neuron activations tensor from OutputSeq and decomposes it using NMF into the number

of components specified by n_components. For example, a model like distilgpt2 has 18,000+

neurons. Using NMF to reduce them to 32 components can reveal interesting underlying firing

patterns.

Parameters:

| Name | Type | Description | Default |

|---|---|---|---|

activations |

Dict[str, numpy.ndarray] |

Activations tensor. Dimensions: (batch, layer, neuron, position) |

required |

n_input_tokens |

int |

Number of input tokens. |

0 |

token_ids |

Tensor |

List of tokens ids. |

tensor([]) |

_path |

str |

Disk path to find javascript that create interactive explorables |

'' |

n_components |

int |

Number of components/factors to reduce the neuron factors to. |

10 |

tokens |

Optional[List[str]] |

The text of each token. |

None |

collect_activations_layer_nums |

Optional[List[int]] |

The list of layer ids whose activtions were collected. If |

None |

Source code in ecco/output.py

def __init__(self, activations: Dict[str, np.ndarray],

n_input_tokens: int = 0,

token_ids: torch.Tensor = torch.Tensor(0),

_path: str = '',

n_components: int = 10,

from_layer: Optional[int] = None,

to_layer: Optional[int] = None,

tokens: Optional[List[str]] = None,

collect_activations_layer_nums: Optional[List[int]] = None,

**kwargs):

"""

Receives a neuron activations tensor from OutputSeq and decomposes it using NMF into the number

of components specified by `n_components`. For example, a model like `distilgpt2` has 18,000+

neurons. Using NMF to reduce them to 32 components can reveal interesting underlying firing

patterns.

Args:

activations: Activations tensor. Dimensions: (batch, layer, neuron, position)

n_input_tokens: Number of input tokens.

token_ids: List of tokens ids.

_path: Disk path to find javascript that create interactive explorables

n_components: Number of components/factors to reduce the neuron factors to.

tokens: The text of each token.

collect_activations_layer_nums: The list of layer ids whose activtions were collected. If

None, then all layers were collected.

"""

if activations == []:

raise ValueError(f"No activation data found. Make sure 'activations=True' was passed to "

f"ecco.from_pretrained().")

self._path = _path

self.token_ids = token_ids

self.n_input_tokens = n_input_tokens

# Joining Encoder and Decoder (if exists) together

activations = np.concatenate(list(activations.values()), axis=-1)

merged_act = self.reshape_activations(activations,

from_layer,

to_layer,

collect_activations_layer_nums)

# 'merged_act' is now ( neuron (and layer), position (and batch) )

activations = merged_act

self.tokens = tokens

# Run NMF. 'activations' is neuron activations shaped (neurons (and layers), positions (and batches))

n_output_tokens = activations.shape[-1]

n_layers = activations.shape[0]

n_components = min([n_components, n_output_tokens])

components = np.zeros((n_layers, n_components, n_output_tokens))

models = []

# Get rid of negative activation values

# (There are some, because GPT2 uses GELU, which allow small negative values)

self.activations = np.maximum(activations, 0).T

self.model = decomposition.NMF(n_components=n_components,

init='random',

random_state=0,

max_iter=500)

self.components = self.model.fit_transform(self.activations).T

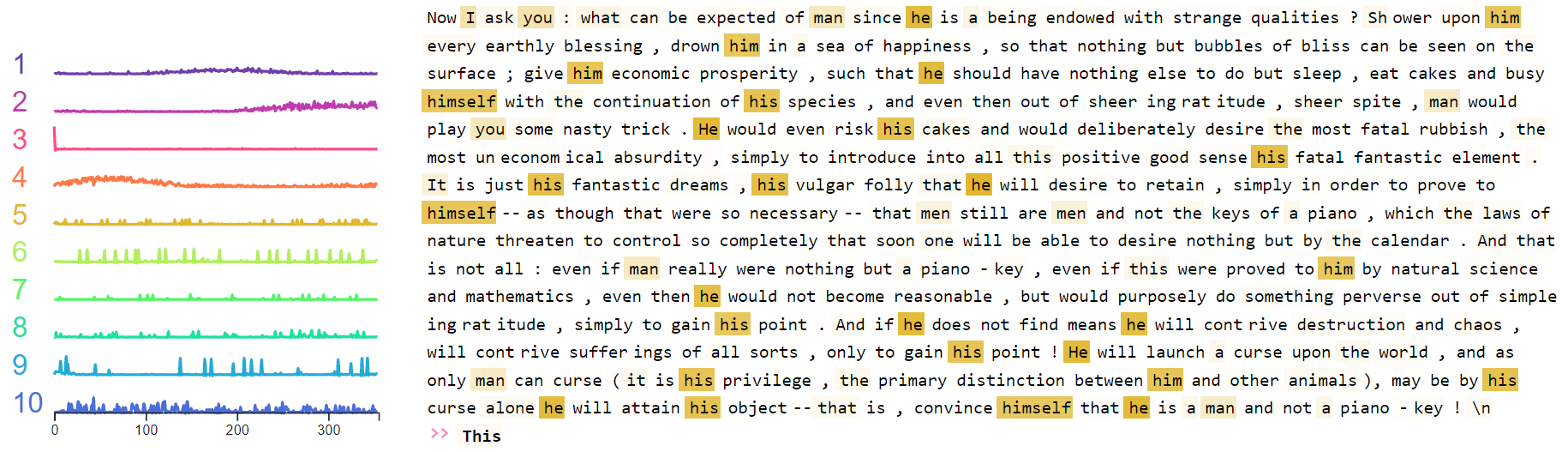

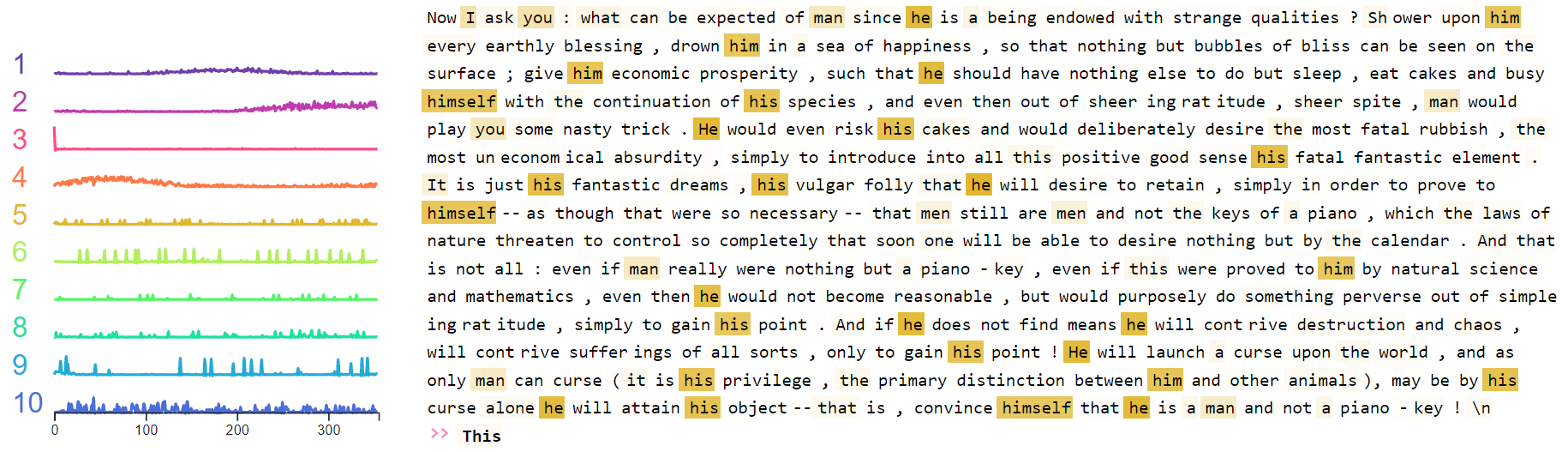

explore(self, input_sequence=0, **kwargs)

Show interactive explorable for a single sequence with sparklines to isolate factors.

Examples:

Parameters:

| Name | Type | Description | Default |

|---|---|---|---|

input_sequence |

int |

Which sequence in the batch to show. |

0 |

Source code in ecco/output.py

def explore(self, input_sequence: int = 0, **kwargs):

"""

Show interactive explorable for a single sequence with sparklines to isolate factors.

Example:

Args:

input_sequence: Which sequence in the batch to show.

"""

tokens = []

for idx, token in enumerate(self.tokens[input_sequence]): # self.tokens[:-1]

type = "input" if idx < self.n_input_tokens else 'output'

tokens.append({'token': token,

'token_id': int(self.token_ids[input_sequence][idx]),

# 'token_id': int(self.token_ids[idx]),

'type': type,

# 'value': str(components[0][comp_num][idx]), # because json complains of floats

'position': idx

})

# If the sequence contains both input and generated tokens:

# Duplicate the factor at index 'n_input_tokens'. THis way

# each token has an activation value (instead of having one activation less than tokens)

# But with different meanings: For inputs, the activation is a response

# For outputs, the activation is a cause

if len(self.token_ids[input_sequence]) != self.n_input_tokens:

# Case: Generation. Duplicate value of last input token.

factors = np.array(

[np.concatenate([comp[:self.n_input_tokens], comp[self.n_input_tokens - 1:]]) for comp in

self.components])

factors = [comp.tolist() for comp in factors] # the json conversion needs this

else:

# Case: no generation

factors = [comp.tolist() for comp in self.components] # the json conversion needs this

data = {

# A list of dicts. Each in the shape {

# Example: [{'token': 'by', 'token_id': 2011, 'type': 'input', 'position': 235}]

'tokens': tokens,

# Three-dimensional list. Shape: (1, factors, sequence length)

'factors': [factors]

}

d.display(d.HTML(filename=os.path.join(self._path, "html", "setup.html")))

d.display(d.HTML(filename=os.path.join(self._path, "html", "basic.html")))

viz_id = 'viz_{}'.format(round(random.random() * 1000000))

# print(data)

js = """

requirejs(['basic', 'ecco'], function(basic, ecco){{

const viz_id = basic.init()

ecco.interactiveTokensAndFactorSparklines(viz_id, {})

}}, function (err) {{

console.log(err);

}})""".format(data)

d.display(d.Javascript(js))

if 'printJson' in kwargs and kwargs['printJson']:

print(data)

return data

reshape_activations(activations, from_layer=None, to_layer=None, collect_activations_layer_nums=None)

staticmethod

Prepares the activations tensor for NMF by reshaping it from four dimensions (batch, layer, neuron, position) down to two: ( neuron (and layer), position (and batch) ).

Parameters:

| Name | Type | Description | Default |

|---|---|---|---|

activations |

tensor |

activations tensors of shape (batch, layers, neurons, positions) and float values |

required |

from_layer |

Optional[int] |

Start value. Used to indicate a range of layers whose activations are to be processed |

None |

to_layer |

Optional[int] |

End value. Used to indicate a range of layers |

None |

collect_activations_layer_nums |

Optional[List[int]] |

A list of layer IDs. Used to indicate specific layers whose activations are to be processed |

None |

Source code in ecco/output.py

@staticmethod

def reshape_activations(activations,

from_layer: Optional[int] = None,

to_layer: Optional[int] = None,

collect_activations_layer_nums: Optional[List[int]] = None):

"""Prepares the activations tensor for NMF by reshaping it from four dimensions

(batch, layer, neuron, position) down to two:

( neuron (and layer), position (and batch) ).

Args:

activations (tensor): activations tensors of shape (batch, layers, neurons, positions) and float values

from_layer (int or None): Start value. Used to indicate a range of layers whose activations are to

be processed

to_layer (int or None): End value. Used to indicate a range of layers

collect_activations_layer_nums (list of ints or None): A list of layer IDs. Used to indicate specific

layers whose activations are to be processed

"""

if len(activations.shape) != 4:

raise ValueError(f"The 'activations' parameter should have four dimensions: "

f"(batch, layers, neurons, positions). "

f"Supplied dimensions: {activations.shape}", 'activations')

if collect_activations_layer_nums is None:

collect_activations_layer_nums = list(range(activations.shape[1]))

layer_nums_to_row_ixs = {layer_num: i

for i, layer_num in enumerate(collect_activations_layer_nums)}

if from_layer is not None or to_layer is not None:

from_layer = from_layer if from_layer is not None else 0

to_layer = to_layer if to_layer is not None else activations.shape[0]

if from_layer == to_layer:

raise ValueError(f"from_layer ({from_layer}) and to_layer ({to_layer}) cannot be the same value. "

"They must be apart by at least one to allow for a layer of activations.")

if from_layer > to_layer:

raise ValueError(f"from_layer ({from_layer}) cannot be larger than to_layer ({to_layer}).")

layer_nums = list(range(from_layer, to_layer))

else:

layer_nums = sorted(layer_nums_to_row_ixs.keys())

if any([num not in layer_nums_to_row_ixs for num in layer_nums]):

available = sorted(layer_nums_to_row_ixs.keys())

raise ValueError(f"Not all layers between from_layer ({from_layer}) and to_layer ({to_layer}) "

f"have recorded activations. Layers with recorded activations are: {available}")

row_ixs = [layer_nums_to_row_ixs[layer_num] for layer_num in layer_nums]

activation_rows = [activations[:, row_ix] for row_ix in row_ixs]

# Merge 'layers' and 'neuron' dimensions. Sending activations down from

# (batch, layer, neuron, position) to (batch, neuron, position)

merged_act = np.concatenate(activation_rows, axis=1)

# merged_act = np.stack(activation_rows, axis=1)

# 'merged_act' is now (batch, neuron (and layer), position)

merged_act = merged_act.swapaxes(0, 1)

# 'merged_act' is now (neuron (and layer), batch, position)

merged_act = merged_act.reshape(merged_act.shape[0], -1)

return merged_act